Mark Zuckerberg, CEO of Meta, today unveiled Massively Multilingual Speech (MMS), a new artificial intelligence speech model. According to the announcement, the system can convert speech to text and text to speech in more than 1,100 languages, and it was trained on data in more than 4,000 languages.

Fighting the disappearance of the world's languages

Many of the world's languages are at risk of disappearing, and the limitations of current speech recognition and speech generation technology will only accelerate this trend.

Meta says it wants to make it easier for people to access information and use devices in their preferred language, even if that language is among the least spoken in the world. That is why it announced today a series of artificial intelligence (AI) models designed to help people do just that.

The importance of multilingual speech models

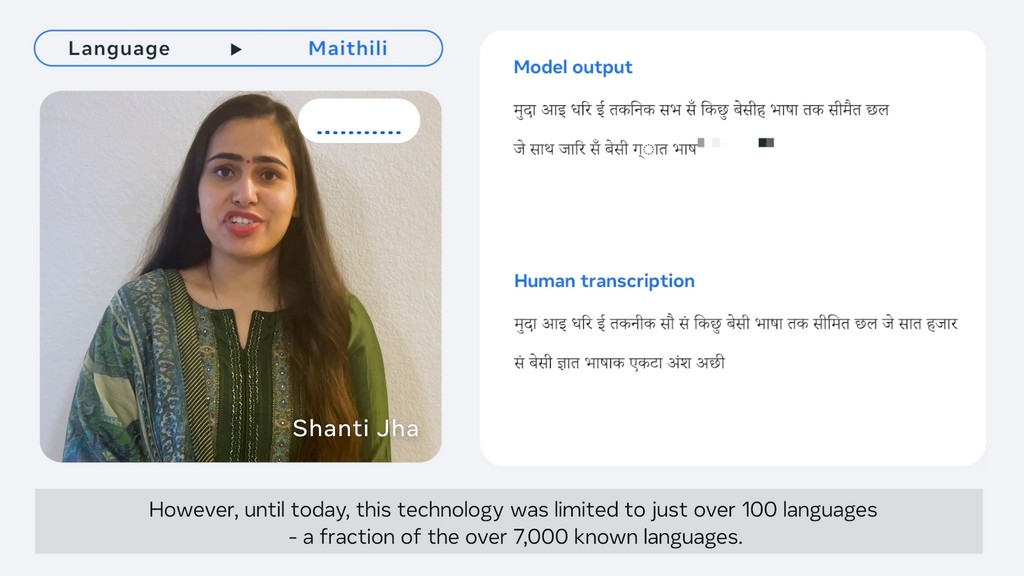

The Massively Multilingual Speech (MMS) models expand text-to-speech and speech-to-text support from the roughly 100 languages covered by the company's earlier models to more than 1,100. The system can also identify more than 4,000 spoken languages.

Speech technology also has many use cases, from virtual and augmented reality to messaging services, that can work in a person's preferred language and understand everyone's voice.

Meta is releasing the models and code as open source so that others in the research community can build on the work, help preserve the world's languages, and bring the world closer together.

Through this project, Meta hopes to contribute to the preservation of linguistic diversity in the world.
